In the previous post, we introduced the pre-print of our review of R packages for movement. R is one of the most used programming softwares to process, visualize and analyze data from tracking devices. The large amount of existing packages makes it difficult to keep track of the spectrum of choices. Our review aimed at an objective introduction to the packages organized by the type of processing or analyzing they focused on, and to provide feedback to developers from a user perspective. For the second objective, we elaborated a survey for package users regarding:

- How popular those packages are;

- How well documented they are;

- How relevant they are for users.

In the review we showed results regarding package documentation. In this post you’ll find the complete results of the survey.

Packages included in the survey

In theory, any package could be potentially useful for movement analysis; either a time series package, a spatial analysis one or even ggplot2 to make more beautiful graphics! For the review, we considered only what we referred to as tracking packages. Tracking packages were those created to either analyze tracking data (i.e. \((x,y,t)\)) or to transform data from tagging devices into proper tracking data. For instance, a package that would use accelerometer, gyroscope and magnetometer data to reconstruct an animal’s trajectory via path integration, thus transforming those data into an \((x,y,t)\) format, would fit into the definition. But a package analyzing accelerometry series to detect changes in behavior would not fit.

For this survey, we added packages that, though not analyzing tracking data, were created to process or analyze data extracted from tracking devices in other formats (e.g. accelerometry for accelerometry data, diveMove for time-depth recorders or pathtrackr for video tracking data). A couple of packages that, were finally discarded from the review because of either being in early stages of development (movement) or because of being archived in CRAN due to unfixed problems (sigloc), were included in the survey.

A total of 72 packages were included in this survey: acc, accelerometry, adehabitatHR, adehabitatHS, adehabitatLT, amt, animalTrack, anipaths, argosfilter, argosTrack, BayesianAnimalTracker, BBMM, bcpa, bsam, caribou, crawl, ctmcmove, ctmm, diveMove, drtracker, EMbC, feedR, FLightR, GeoLight, GGIR, hab, HMMoce, Kftrack, m2b, marcher, migrateR, mkde, momentuHMM, move, moveHMM, movement, movementAnalysis, moveNT, moveVis, moveWindSpeed, nparACT, pathtrackr, pawacc, PhysicalActivity, probgls, rbl, recurse, rhr, rpostgisLT, rsMove, SDLfilter, SGAT/TripEstimation, sigloc, SimilarityMeasures, SiMRiv, smam, SwimR, T-LoCoH, telemetr, trackdem, trackeR, Trackit, TrackReconstruction, TrajDataMining, trajectories, trip, TwGeos/BAStag, TwilightFree, Ukfsst/kfsst, VTrack and wildlifeDI.

Packages from any public repository (e.g. CRAN, GitHub, R-forge) were included in the survey. Packages created for eye, computer-mouse or fishing vessel movement were not considered here (but you are welcome to make your own survey about them!).

Note: trajr was added to the survey in a late stage, but because of that, and the fact that it got only one response, we filtered it out of the analysis.

Participation in the survey

The survey was designed to be completely anonymous, meaning that we had no way to know who participated and not even the date of participation was saved. There was no previous selection of the participants and no probabilistic sampling was involved. The survey was advertised by Twitter, mailing lists (r-sig-geo and r-sig-ecology), individual emails to researchers and the mablab website.

The survey got exemption from the Institutional Review Board at University of Florida (IRB02 Office, Box 112250, University of Florida, Gainesville, FL 32611-2250).

A total of 446 people participated in the survey, and 233 answered all four questions. To answer all questions the participant had to have tried at least one of the packages. In the following sections, we analyze only completed surveys.

The questions

User level

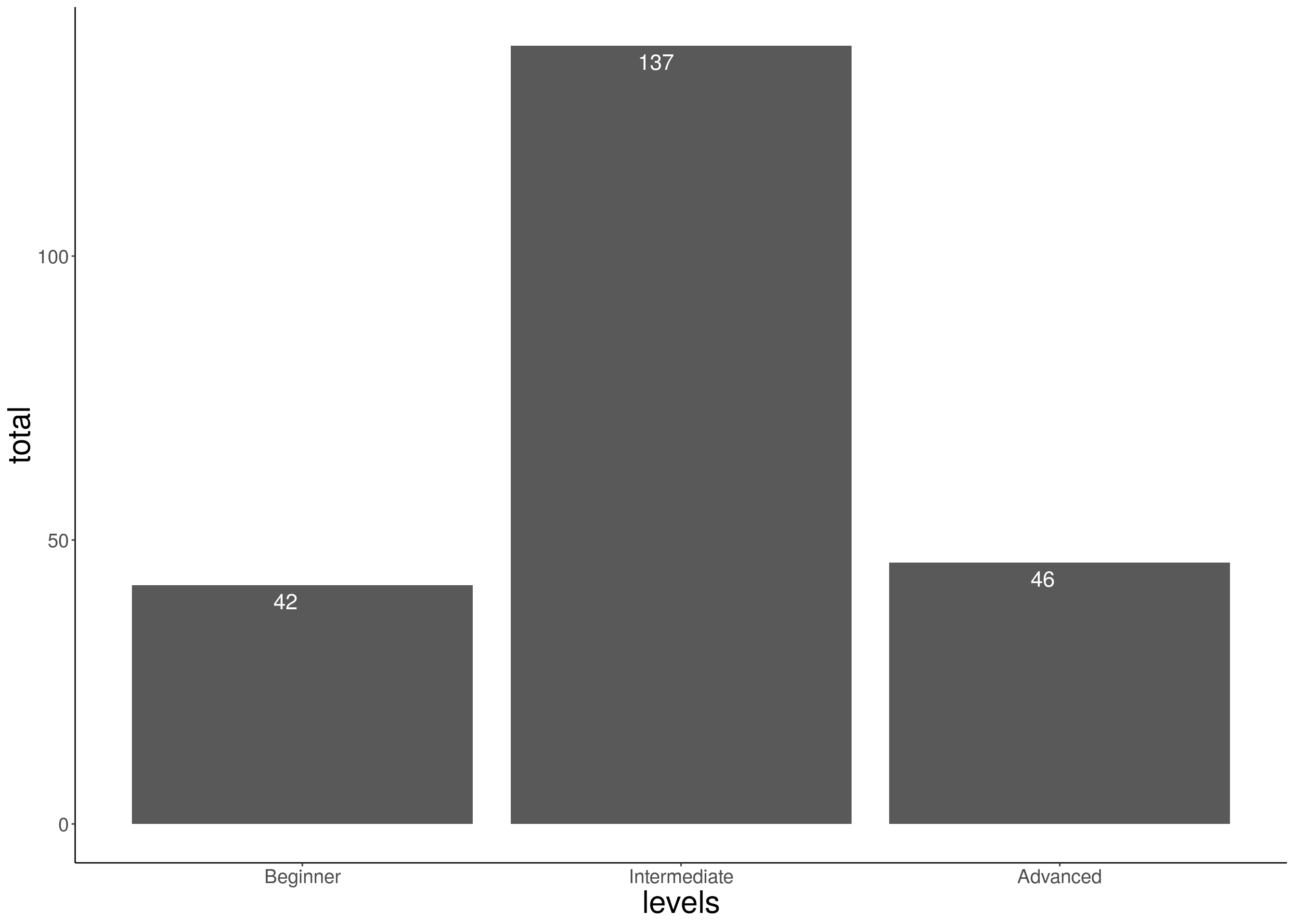

Let’s see first the level of use in R of the participants. The options were:

- Beginner: You only use existing packages and occasionally write some lines of code.

- Intermediate: You use existing packages but you also write and optimize your own functions.

- Advanced: You commonly use version control or contribute to develop packages.

Figure 1: Level of R use

Most participants considered themselves in an intermediate level (60.9 %), meaning that they could write functions in R. Some others were beginners (18.7 %) and advanced (20.4 %) R users.

Package use

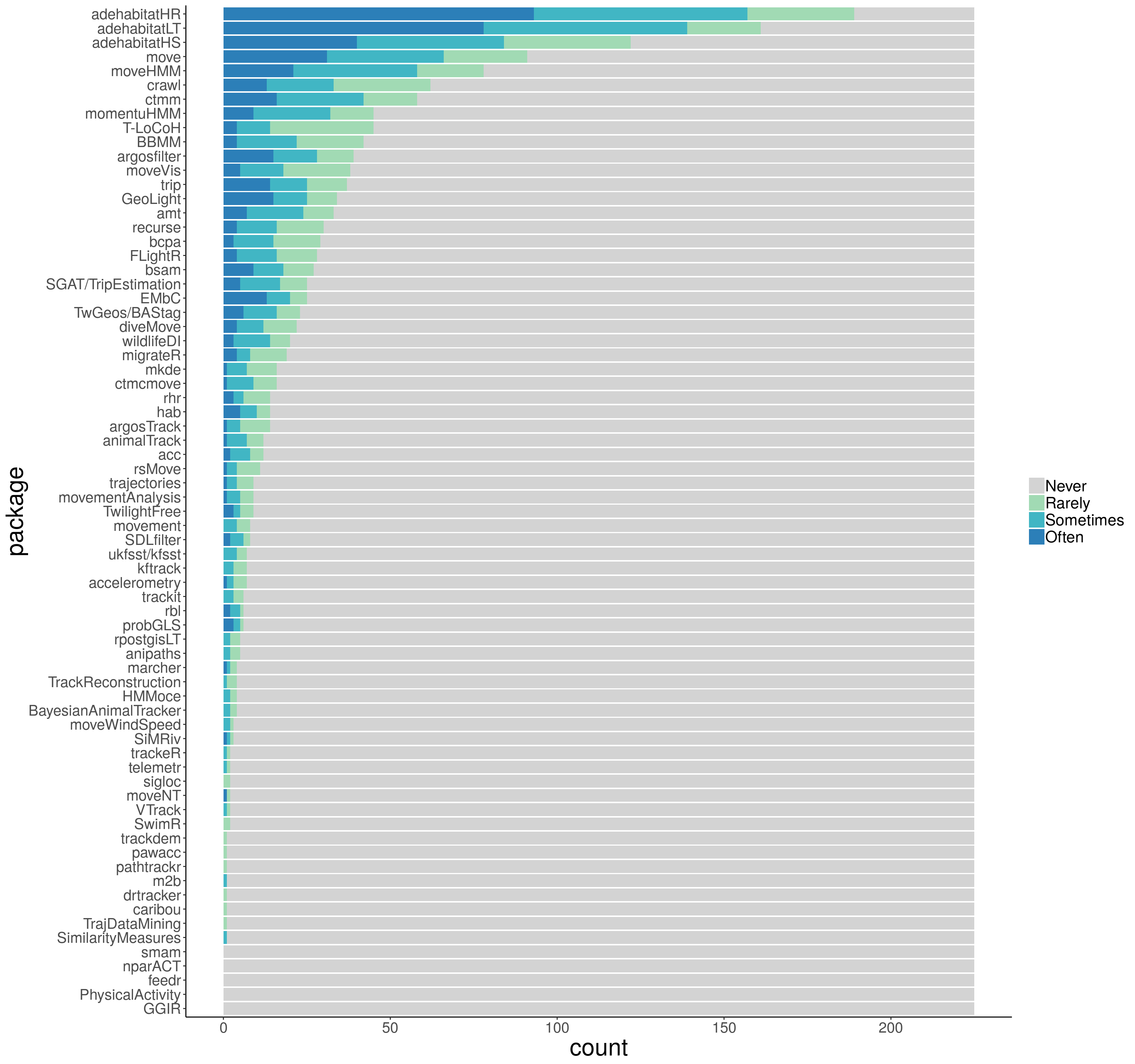

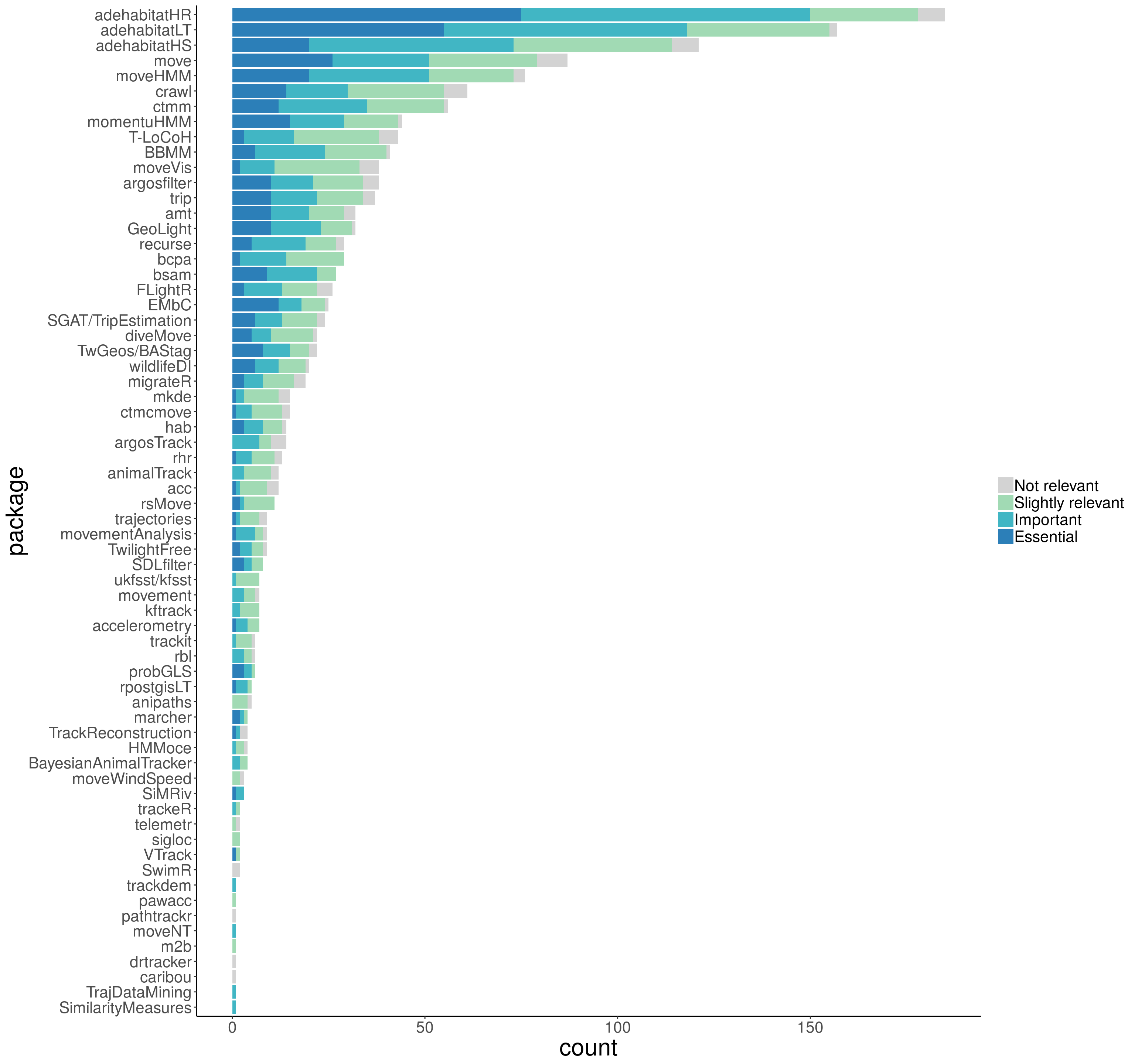

The first question about package use was: How often do you use each of these packages? (Never, Rarely, Sometimes, Often)

The bar graphics below show that most packages were unknown (or at least had never been used) by the survey participants. The adehabitat packages (HR, LT and HS) were the most used packages. These packages provide a collection of tools to estimate home range, perform simple trajectory analysis and analyze habitat selection, respectively. On the bottom of the graphic, smam (for animal movement models), PhysicalActivity, nparACT, GGIR (these three for accelerometry data on human patients) and feedr (to handle radio telemetry data) had no users among the participants. For that reason, those 5 packages will not appear in the analysis of the next questions.

Figure 2: Package use

If you want to check the numbers for your favorite package, the complete table is here

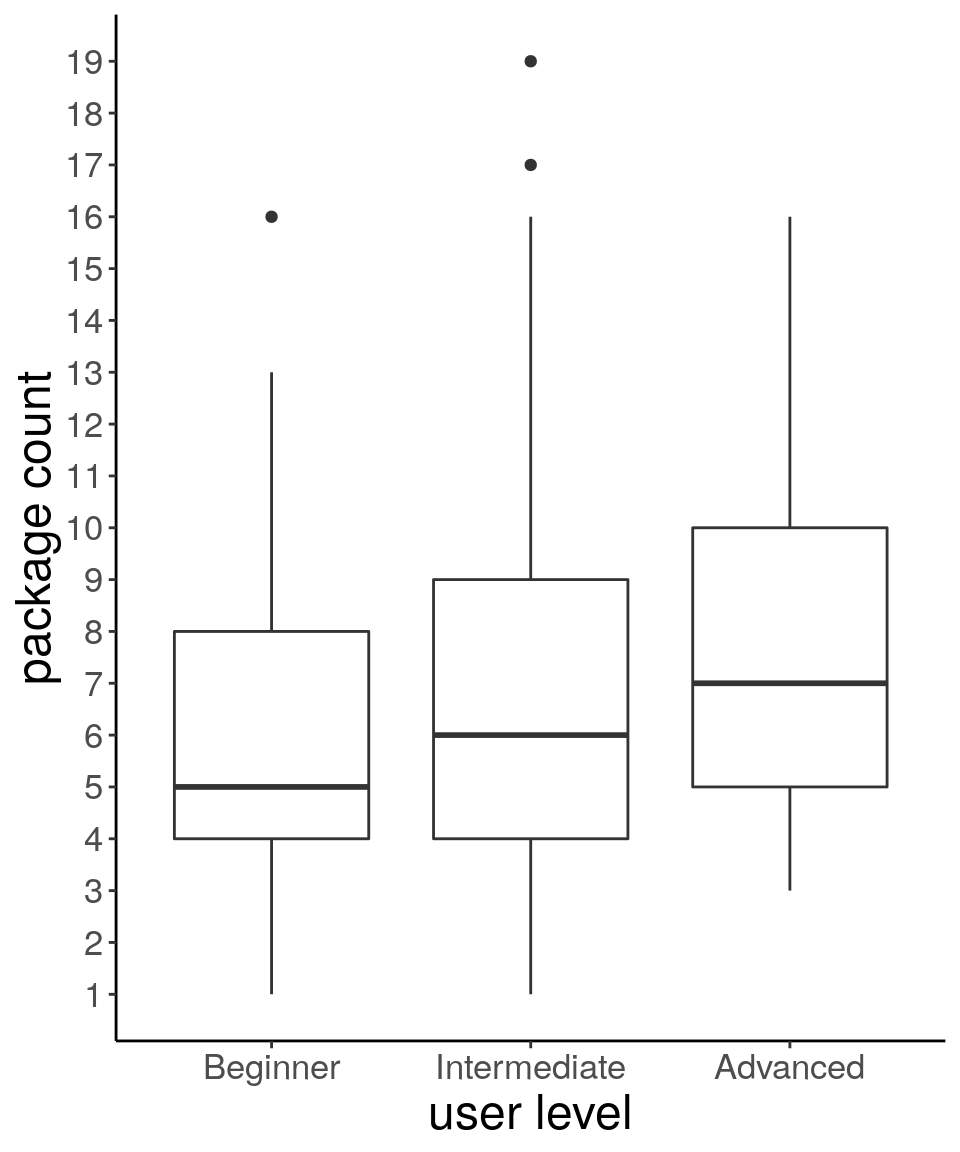

There is actually not much difference in the number of packages used by the distinct levels of R users as you can see in the boxplots below:

Figure 3: Packages per user level

Package documentation

Without proper user testing and peer editing, package documentation can lead to large gaps of understanding and limited usefulness of the package. If functions and workflows are not expressly defined, a package’s capacity to help users is undermined.

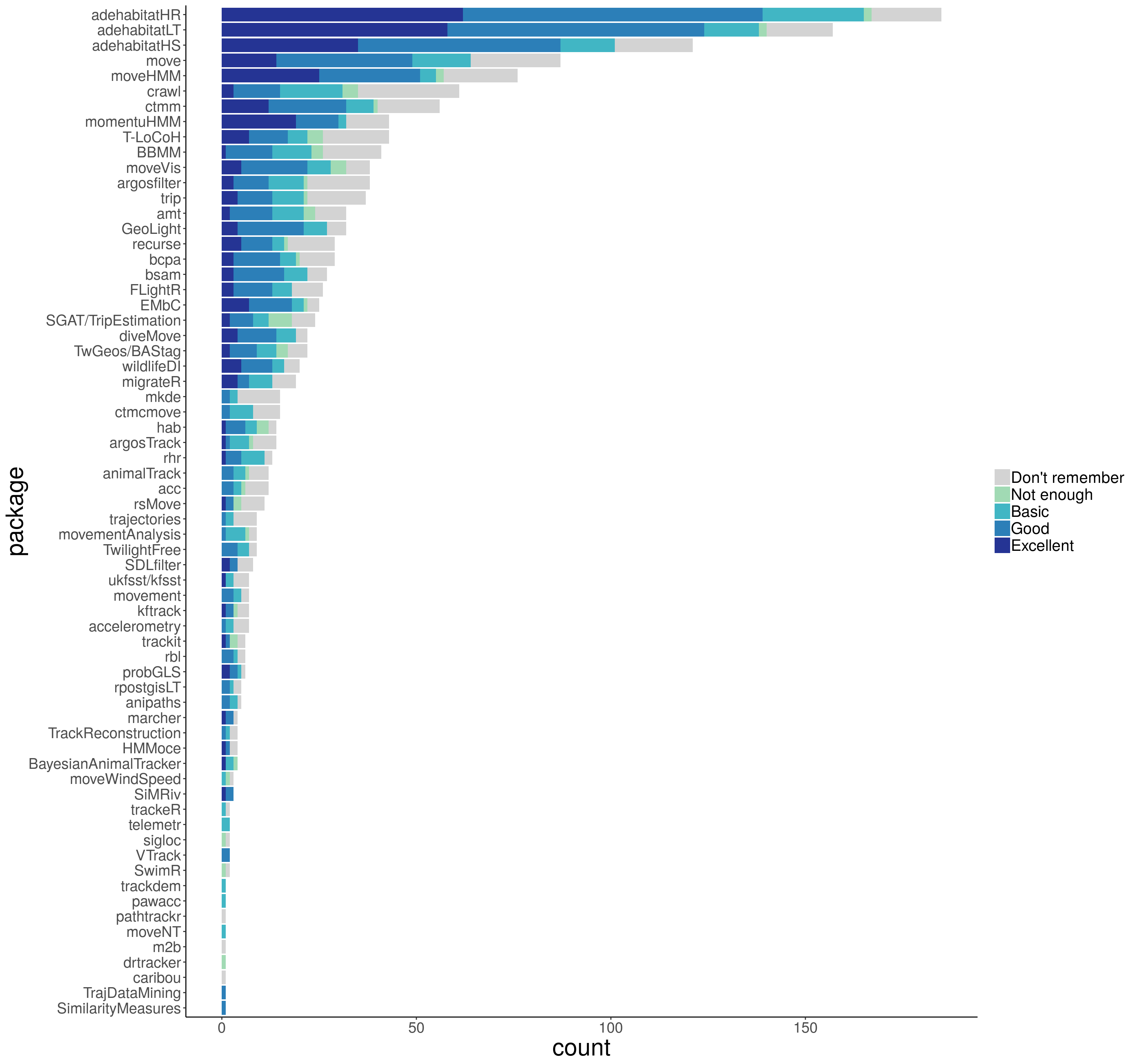

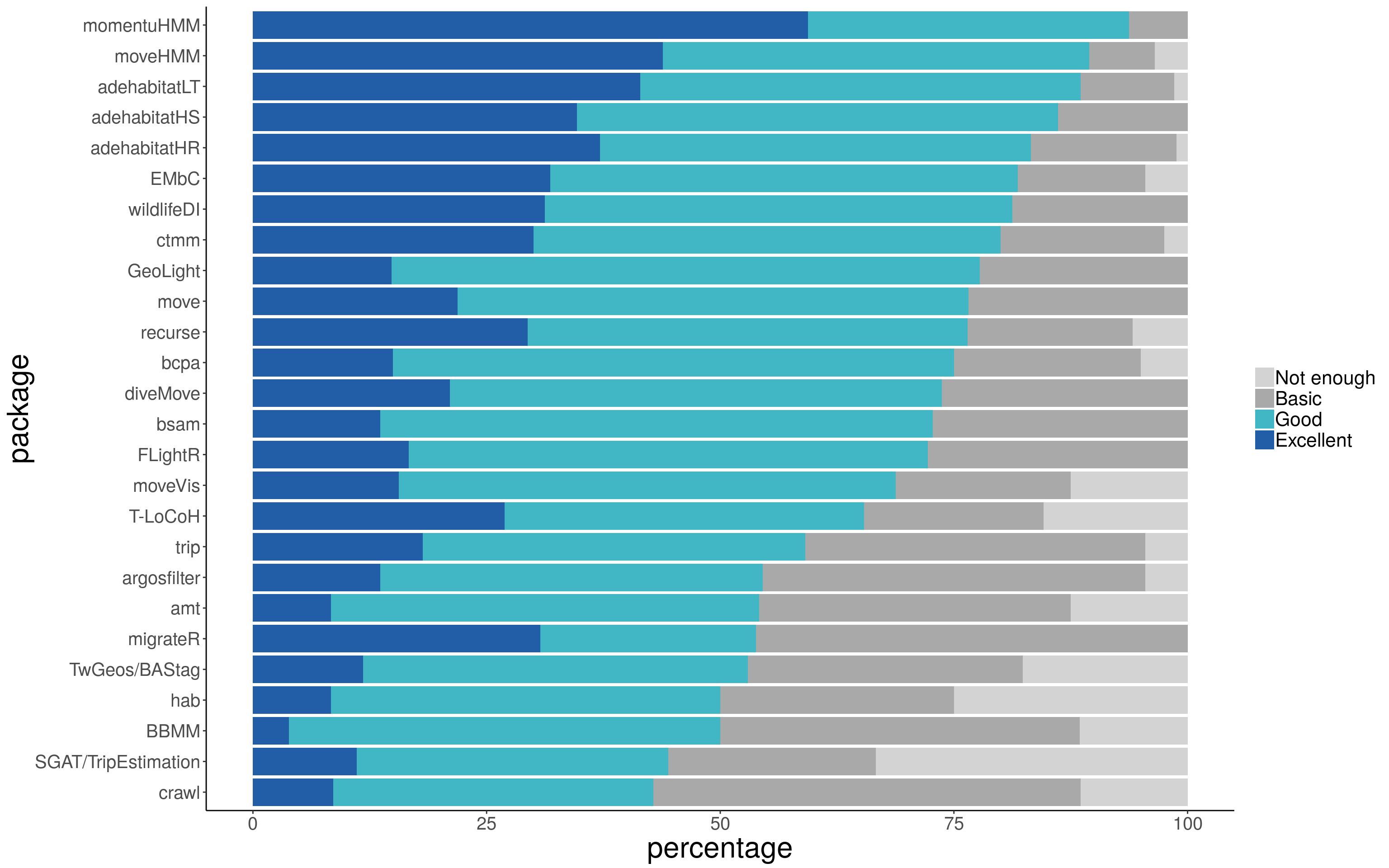

In this survey we asked the participants how helpful was the documentation provided for each of the packages they stated to use. Documentation includes what is contained in the manual and help pages, vignettes, published manuscripts, and other material about the package provided by the authors. The participants had to answer using one of the following options:

- Not enough: “It’s not enough to let me know how to do what I need”

- Basic: “It’s enough to let me get started with simple use of the functions but not to go further (e.g. use all arguments in the functions, or put extra variables)”

- Good: “I did everything I wanted and needed to do with it”

- Excellent: “I ended up doing even more than what I planned because of the excellent information in the documentation”

- Don’t remember: “I honestly can’t remember…”

Figure 4: Bar plots of absolute frequency of each category of package documentation

Remember that participants could only give their opinion on documentation regarding the packages they had used. Hence, the packages with many users got many documentation answers (Fig. 1 and 4). Figure 5 allows for a closer look at the proportion of type of response for each package.

To identify some packages with remarkably good documentation, let’s first only consider those packages with at least 10 responses on the quality of documentation (regardless of the “Don’t remember”). These are 27 (you can see the table of responses here ). Among them, momentuHMM had more than 50% of the responses (59.38%; 19) as “excellent documentation”, meaning that the documentation was so good that thanks to it, more than half of its users discovered additional features of the package and were able to do more analyses than what they initially planned. Moreover, 11 packages had more than 75% of the responses as either “good” or “excellent”: momentuHMM (93.75%; 30), moveHMM (89.47%; 51), adehabitatLT (88.57%; 124), adehabitatHS (86.14%; 87), adehabitatHR (83.23%; 139), EMbC (81.82%; 18), wildlifeDI (81.25%; 13), ctmm (80%; 32), GeoLight (77.78%; 21), move (76.56%; 49), recurse (76.47%; 13). The two leading packages, momentuHMM and moveHMM, focus on the use of Hidden Markov models which allow identifying different patterns of behavior called states.

Figure 5: Bar plots of relative frequency of each category of package documentation (for packages with more than 5 users)

Package Revelance

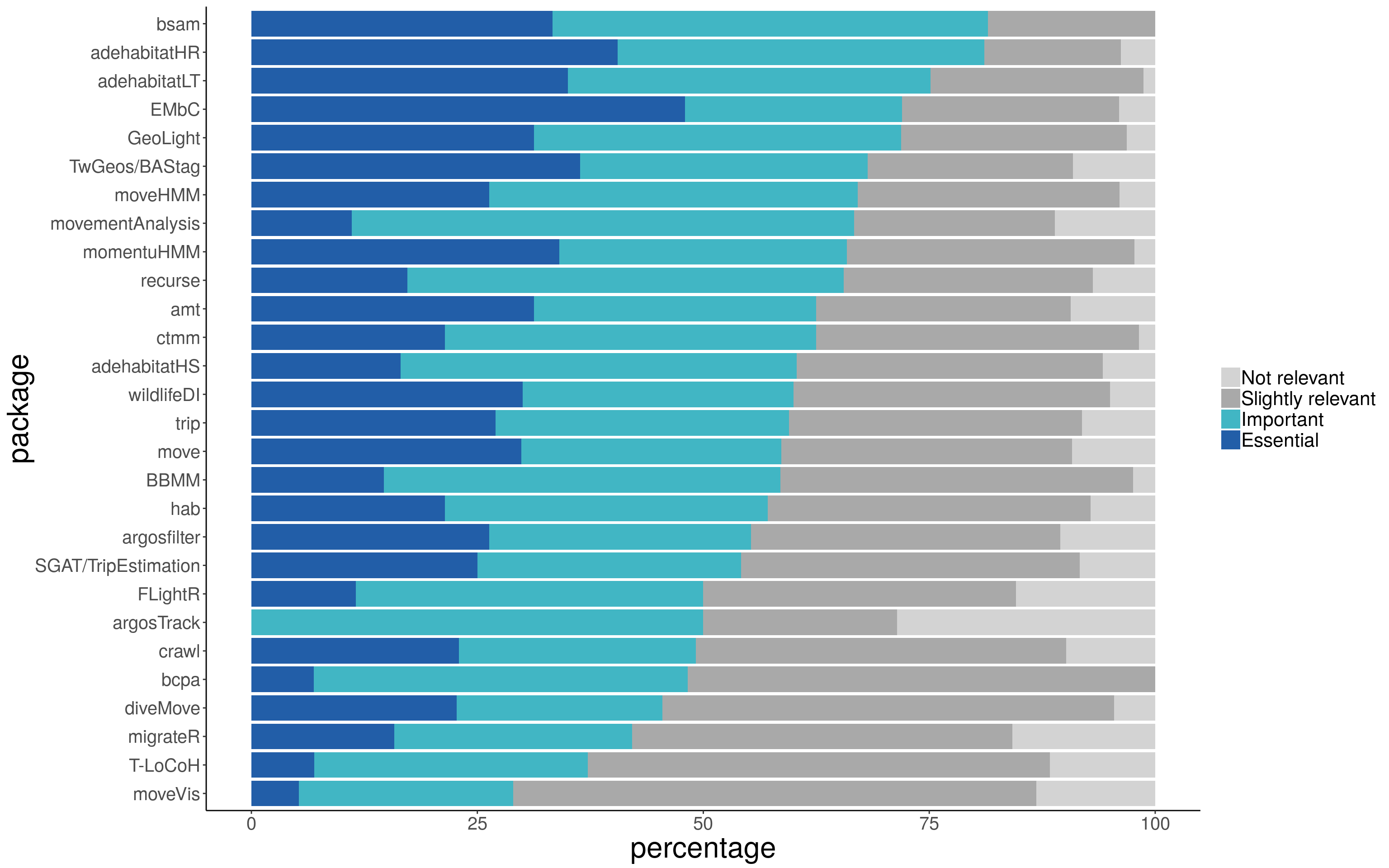

Participants were asked how relevant was each of the packages they use for their work. They had to answer using one of the following options:

- Not relevant: “I tried the package but really didn’t find it a good use for my work”

- Slightly relevant: “It helps in my work, but not for the core of it”

- Important: “It’s important for the core of my work, but if it didn’t exist, there are other packages or solutions to obtain something similar”

- Essential: “I wouldn’t have done the key part of my work without this package”

Figure 6: Bar plots of absolute frequency of each category of package relevance

The two barplots show the absolute and relative frequency of the answers for each package, respectively.

We identified the packages that were highly relevant for their users, considering only those packages with at least 10 responses. Among these 33 packages, three were regarded as either “Important” or “Essential” for more than 75% of their users: bsam (81.48%; 22), adehabitatHR (81.08%; 150), and adehabitatLT (75.16%; 118). bsam allows fitting Bayesian state-space models to animal tracking data.

Figure 7: Bar plots of relative frequency of each category of package relevance (for packages with more than 5 users)

If you want to check the numbers for your favorite package, the complete table is here.

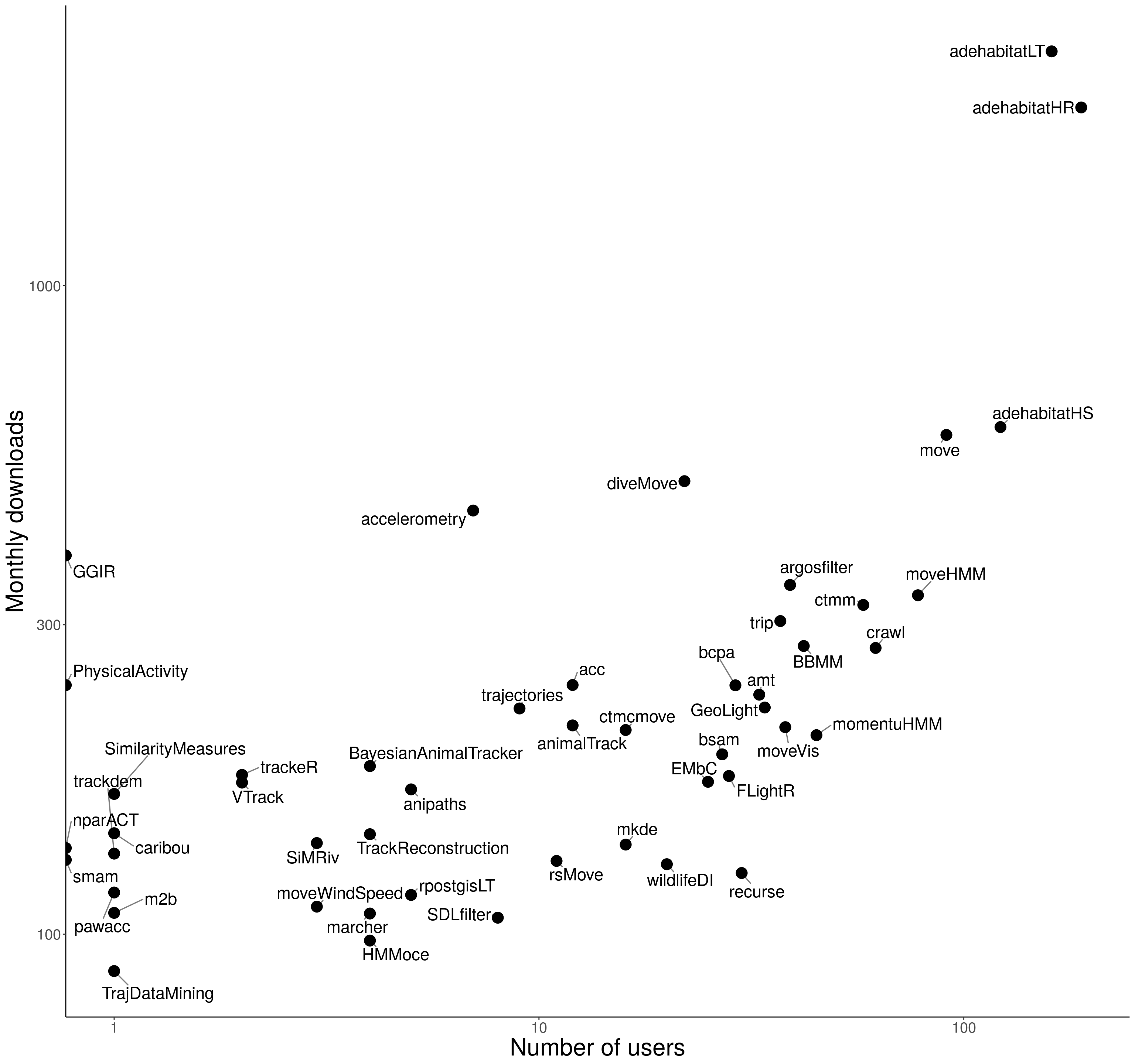

Survey representativity

To get a rough idea of how representative the survey was of the population of the package users, we compared the number of participants that used each package to the number of monthly downloads that each package has.

The number of downloads were calculated using the R package cran.stats. It calculates the number of independent downloads by each package (substracting downloads by dependencies) by day. It only works for downloads using Rstudio, and for packages on CRAN. So for the tracking packages on CRAN, we computed the average of downloads per month, from September 2017 to August 2018; less months were considered for packages that were less than one year old.

There is no perfect match between the number of users and the number of downloads per package, but a correlation 0.85 for the 49 packages on CRAN, provides evidence of an overall good representation of the users of tracking packages in the survey. Moreover, most of the packages with very few users in the survey regardless of their relatively high download statistics were accelerometry packages for human patients, which would be revealing that through Twitter and emails we did not reach that subpopulation of users.

A log-log plot for both metrics is shown in the figure below.

Summary

Most packages had very few users among the participants. The vast landscape of packages could be leading users to: 1) rely on the “old” packages (

adehabitat) that gather most functions for common analyses in movement and 2) search for other packages when doing other specific analyses. Moreover, many packages contain functions that other packages have implemented too (see more details in the review), so repetition could make users spread between packages.After the

adehabitatpackages, several packages for modeling animal movement (momentuHMM,moveHMM,crawlandctmm) showed to be very popular, which could be an indicator of an increase in research on movement models.Few of the packages had remarkably good documentation (>75% of “good” or “excellent” documentation), and, on the other end of the spectrum, a couple of packages got less than 50% of “good” or “excellent” rates.

Most packages were relevant for the work of their users, which is a positive feature!

More posts about R packages soon. Stay tuned!